Systematic Review


Critical Appraisal

"Critical appraisal is the process of carefully and systematically examining research to judge its trustworthiness, and its value and relevance in a particular context. It is an essential skill for evidence-based medicine because it allows clinicians to find and use research evidence reliably and efficiently."

Burls, A. (2009). What is critical appraisal? In What Is This Series: Evidence-based medicine. Available online at What is Critical Appraisal?

The basics of Critical Appraisal:

- Validity: measured by research design, method and procedure

- Trustworthiness:

  - Clear statement of findings

  - Precise documentation of results

  - Rigorous data analysis

  - Analysis of how findings fit within established research

- Value & relevance: Are results applicable to the clinical question and/or population of interest?

Why Critical Appraisal Is Important:

- Before applying evidence to a clinical problem, it is vital that the researcher critically evaluate:

  - The study design used to generate the research evidence

  - Possible bias in, and the reliability of, the evidence presented

  - The practical relevance of the research findings

Source: CASP

LATITUDES Network: Appraisal tools library

LATITUDES is a library of validity assessment tools for use in evidence syntheses. Researchers can register tools under development. The website also provides access to training resources.

Validity assessment tool

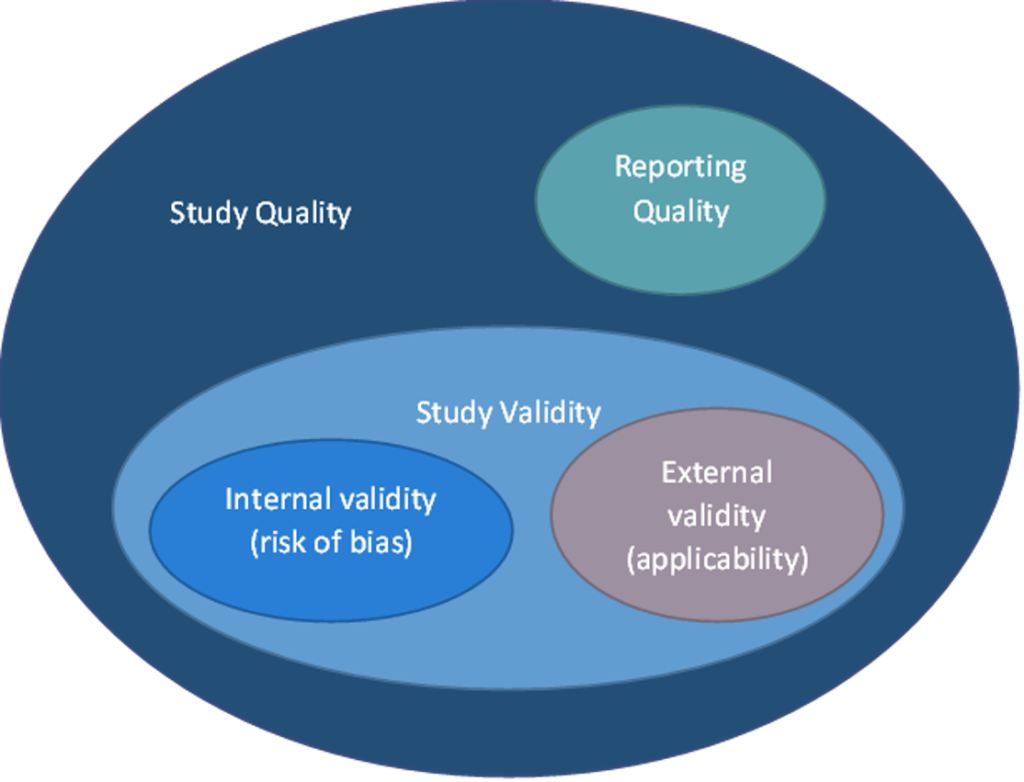

Study “quality” is not well defined but is generally considered to have three main components:

- Internal validity/Risk of bias: Poor design, conduct or analysis can introduce bias or systematic error affecting study results and conclusions

- External validity: The applicability of the study findings to the review question or the generalizability of findings to real-world settings and populations

- Reporting quality: How well the study is reported. It is difficult to assess the other components of study quality if the study is not reported with the appropriate level of detail. However, poor reporting alone does not necessarily mean that the validity of the study is compromised.

What is a validity assessment tool? LATITUDES Network. (2023, September 1). https://www.latitudes-network.org/library/what-is-a-validity-tool/

Critical Appraisal Checklists

- CASP Checklists

This set of eight critical appraisal tools is designed to be used when reading research. CASP has appraisal checklists designed for use with Systematic Reviews, Randomised Controlled Trials, Cohort Studies, Case Control Studies, Economic Evaluations, Diagnostic Studies, Qualitative studies and Clinical Prediction Rule.

- JBI Critical Appraisal Tools

JBI’s critical appraisal tools assist in assessing the trustworthiness, relevance and results of published papers.

Critical Appraisal Process

Critical appraisal is a systematic process of analyzing research to determine the strength of its validity, reliability and relevance.

- What is the focus of the study?

  - The focus should address the population, intervention and outcome

- What type of study was completed?

  - Is the study design matched with the domain of the research question?

- What are the study characteristics?

  - Use the PICO question format to determine the study characteristics:

    - What is the Patient/Population/Problem? How were the participants selected?

    - What intervention/treatment/test is being studied?

    - Does the study compare the intervention to a control group?

    - What outcomes are being assessed? Are the outcomes objective, subjective or surrogate?

- How did the researchers address bias within the study?

  - Were the study participants randomly assigned to study groups?

  - Was the study double- or triple-blinded?

  - Were the study groups similar at the beginning of the study?

  - What was the percentage of participant attrition? How many left the study prior to completion?

  - Apart from the tested intervention, were the study groups treated equally?

- What are the study results? Are the results valid?

  - Were the study's effects reported comprehensively?

  - What outcomes were measured? Were they clearly specified?

  - Would the dropout rate affect the study results?

  - Is there any missing or incomplete data?

  - Are the potential sources of bias identified?

  - How large was the treatment/intervention effect?

  - Do the authors provide access to the raw data?

- Is this study relevant to your population, intervention and outcome?

  - Are the results generalizable?

  - Compare study participants to your population of study. Are the outcome measures relevant?

  - Is this intervention applicable to your patient/population? (Including patient values)

  - Would this intervention provide greater value to your patient/population?

  - Would participant differences alter the outcomes?

  - Are there limitations in this study that would impact the outcomes desired?

University of Canberra library. (2021). Module 3: Appraisal. Evidence-based practice in health. https://canberra.libguides.com/c.php?g=599346&p=4149244
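Two of the appraisal questions, the percentage of participant attrition and the size of the treatment/intervention effect, come down to simple arithmetic. The Python sketch below is illustrative only: the function names and the trial figures are hypothetical and do not come from the cited module or from any real study. It assumes a two-arm trial with a binary (event / no event) outcome.

```python
def attrition_percent(randomized: int, completed: int) -> float:
    """Percentage of randomized participants who left before completion."""
    if randomized <= 0:
        raise ValueError("randomized must be a positive count")
    if not 0 <= completed <= randomized:
        raise ValueError("completed must be between 0 and randomized")
    return 100.0 * (randomized - completed) / randomized


def effect_sizes(events_tx: int, n_tx: int, events_ctrl: int, n_ctrl: int) -> dict:
    """Risk difference and relative risk for a binary outcome.

    events_* are the participants who experienced the outcome;
    n_* are the participants analyzed in each group.
    """
    if n_tx <= 0 or n_ctrl <= 0:
        raise ValueError("group sizes must be positive")
    risk_tx = events_tx / n_tx
    risk_ctrl = events_ctrl / n_ctrl
    if risk_ctrl == 0:
        raise ValueError("relative risk is undefined when the control risk is 0")
    return {
        "risk_difference": risk_tx - risk_ctrl,
        "relative_risk": risk_tx / risk_ctrl,
    }


# Hypothetical trial: 200 participants randomized to each group.
print(f"Intervention attrition: {attrition_percent(200, 180):.1f}%")
print(f"Control attrition: {attrition_percent(200, 170):.1f}%")

# Hypothetical outcome counts among those who completed the study.
result = effect_sizes(events_tx=36, n_tx=180, events_ctrl=51, n_ctrl=170)
print(f"Risk difference: {result['risk_difference']:.2f}")
print(f"Relative risk: {result['relative_risk']:.2f}")
```

Comparing attrition between the two groups, rather than only overall, helps when judging whether dropout could have affected the results.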

Types of Bias

Bias can arise in the design and methodology of a study and can distort its findings. When bias is present, the reported results may not accurately reflect the true effect. No study is completely free from bias. Through critical appraisal, the reviewer should systematically check that the researchers have minimized and acknowledged all forms of bias.

Selection bias: Systematic differences in the baseline characteristics of the intervention and comparator groups. Random assignment of subjects to the intervention and control groups, with the allocation concealed from those enrolling them, will reduce the risk of selection bias.

Performance bias: Differences between groups in the care that is provided, or in the exposure to factors other than the interventions of interest. Blinding of participants, researchers and outcome assessors will reduce the risk of performance bias.

Attrition bias: Loss of participants from either the control or intervention group through withdrawal or dropout. This can distort the comparison between the intervention and control groups. Enrolling more study participants than deemed necessary can help to prevent the loss of needed data points and compensate for expected withdrawals.
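One common way to compensate for expected withdrawals is to divide the required number of completers by the proportion expected to remain in the study: enrollment = required / (1 - expected dropout). The Python sketch below illustrates that arithmetic. It assumes the required number of completers has already been set by a separate power calculation; the function name and figures are hypothetical.

```python
import math


def enrollment_target(required_completers: int, expected_dropout: float) -> int:
    """Participants to enroll so that, after the expected dropout,
    about `required_completers` remain.

    expected_dropout is a proportion in the range [0, 1), e.g. 0.25 for 25%.
    """
    if required_completers <= 0:
        raise ValueError("required_completers must be positive")
    if not 0.0 <= expected_dropout < 1.0:
        raise ValueError("expected_dropout must be in the range [0, 1)")
    # Round up: enrolling a fraction of a participant is not possible.
    return math.ceil(required_completers / (1.0 - expected_dropout))


# Hypothetical example: 100 completers needed, 25% expected to withdraw.
print(enrollment_target(100, 0.25))
```

Over-enrollment protects the number of data points available for analysis. It does not by itself correct the comparison if participants drop out of the two groups at different rates or for different reasons.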

Detection bias: Differences between groups in how outcomes are determined. To minimize detection bias, the methodology applied to the intervention and control groups must be equivalent with the exception of the measured intervention. Blinding of participants, researchers and assessors will also minimize detection bias.

Reporting bias: Differences between reported and unreported findings. All of the data collected during a study must be presented in an objective manner regardless of results to minimize reporting bias.

For more information: Cochrane Training Handbook for Assessing Risk of Bias

- Last Updated: Nov 24, 2025 8:14 AM

- URL: https://libraryguides.binghamton.edu/systematicreview
